One web-scraping exercise a day: this time, a fairly simple website.

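```python
import requests
from bs4 import BeautifulSoup
import pandas as pd
import time


def get_data(url):
    """Scrape one month of historical weather from a tianqihoubao.com month page."""
    resp = requests.get(url, timeout=10)
    resp.raise_for_status()  # stop on HTTP errors instead of parsing an error page
    html = resp.content.decode('gbk')  # the page is decoded as GBK
    soup = BeautifulSoup(html, 'html.parser')

    tr_list = soup.find_all('tr')
    dates, conditions, tempmin, tempmax = [], [], [], []
    for data in tr_list[1:]:  # skip the table header row
        sub_data = data.text.split()
        dates.append(sub_data[0])
        conditions.append(''.join(sub_data[1:3]))  # day and night conditions
        tempmax.append(sub_data[3])
        tempmin.append(sub_data[5])  # index 4 is skipped (presumably the "/" separator)

    _data = pd.DataFrame()
    _data["日期"] = dates           # date
    _data['天气状况'] = conditions  # weather conditions
    _data['最高气温'] = tempmax     # high temperature
    _data['最低气温'] = tempmin     # low temperature
    return _data
```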
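To see why `get_data()` reads index 0, indices 1 and 2, index 3 and index 5, it can help to run the same splitting on a single row offline. The sketch below uses a made-up row string (not real data from the site) laid out the way those indices assume: date, day and night conditions, high, a "/" separator, low, then wind. `parse_row` is a hypothetical helper, not part of the original script.

```python
def parse_row(text):
    """Pick fields from one row's text by position, mirroring get_data()."""
    parts = text.split()  # split on any run of whitespace
    return {
        "date": parts[0],
        "condition": "".join(parts[1:3]),  # day + night conditions glued together
        "high": parts[3],
        "low": parts[5],  # parts[4] is assumed to be the "/" separator
    }


# Hypothetical row text, invented to match the assumed column layout
sample = "2011-01-01  Cloudy /Overcast  8℃ / 2℃  N-wind <=3 /N-wind <=3"
print(parse_row(sample))
# {'date': '2011-01-01', 'condition': 'Cloudy/Overcast', 'high': '8℃', 'low': '2℃'}
```

If a row has fewer than six whitespace-separated tokens (for example a blank or footer row), `parts[5]` raises `IndexError`, so that is the first thing to check if the page layout ever changes.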

```python
# To scrape any month, pass that month's page URL to get_data().
# Example: all twelve months of 2011 for the "huichang" city page
monthly = []
for month in range(1, 13):
    print(f'Scraping data for month {month}...')
    url = f'http://www.tianqihoubao.com/lishi/huichang/month/2011{month:02d}.html'
    monthly.append(get_data(url))

print("Scraping finished! Merging the year's data, please wait...")
time.sleep(3)

data = pd.concat(monthly).reset_index(drop=True)
```

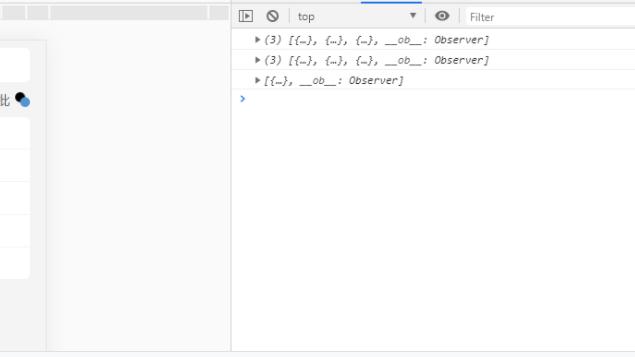

print(data)

print('数据整合完毕!')

#数据爬取结果会保存在此代码同一径下

示例结果:
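The temperature columns come back as text (for example "8℃", assuming the site formats them that way), so they cannot be averaged or plotted directly. A minimal sketch of one way to turn them into integers, using a hypothetical helper `parse_temp` built on the standard `re` module:

```python
import re


def parse_temp(value):
    """Return the integer in a temperature string such as '8℃' or '-3℃', or None."""
    match = re.search(r"-?\d+", value)  # first (optionally negative) run of digits
    return int(match.group()) if match else None


print(parse_temp("8℃"), parse_temp("-3℃"), parse_temp("n/a"))  # 8 -3 None
```

Applied to the scraped table, `data['最高气温'] = data['最高气温'].map(parse_temp)` (and likewise for `'最低气温'`) would give numeric columns. The regex keeps only whole degrees, so a value like "8.5℃" would become 8; that is an assumption about the data worth checking.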